BRYAN BOATNER

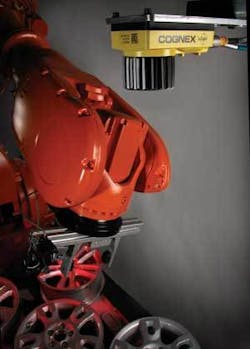

For simple vision-guided robotic (VGR) applications, a VGR package from a robot supplier can in some cases reduce integration time. Typically, however, companies experienced in industrial machine vision provide the broadest range of machine-vision technology, with the most reliable vision tools for part location, inspection, measurement, and code reading.

The first step in any machine-vision application—and the one that usually determines whether the application succeeds or fails—involves locating the part within the camera’s field of view. Vision-guided robotic or inspection performance is significantly limited when process variability prevents a vision sensor from providing repeatable part location. It is therefore important to understand the factors that can cause parts to vary in appearance, because that variability can make accurate pattern matching extremely challenging.

Part location

Traditional pattern-matching technology relies on a pixel-grid analysis commonly known as normalized correlation. This method measures the statistical similarity between a gray-level model (or reference image) of a part and each portion of the image to determine the part’s x-y position. Though effective in some situations, this approach limits both the ability to find parts and the accuracy with which they are found under the varying-appearance conditions that are common on production lines.
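
To make the idea concrete, here is a minimal Python sketch of normalized correlation: a translation-only, exhaustive search that scores every image window against a gray-level model. The function names and the tiny test image are illustrative assumptions, not any vendor's implementation; production systems use much faster search strategies.

```python
import math

def ncc(model, window):
    """Normalized correlation of two equal-sized gray-level patches
    (lists of rows). Returns a score in [-1, 1]; 1 means a perfect match."""
    a = [p for row in model for p in row]
    b = [p for row in window for p in row]
    ma, mb = sum(a) / len(a), sum(b) / len(b)
    num = sum((x - ma) * (y - mb) for x, y in zip(a, b))
    den = math.sqrt(sum((x - ma) ** 2 for x in a) * sum((y - mb) ** 2 for y in b))
    return num / den if den > 0 else 0.0  # flat windows carry no information

def find_best_match(image, model):
    """Slide the model over every x-y offset and keep the best score.
    Translation only: no rotation or scale search."""
    mh, mw = len(model), len(model[0])
    best = (-2.0, None)
    for y in range(len(image) - mh + 1):
        for x in range(len(image[0]) - mw + 1):
            window = [row[x:x + mw] for row in image[y:y + mh]]
            score = ncc(model, window)
            if score > best[0]:
                best = (score, (x, y))
    return best

# Usage: paste a brighter, higher-contrast copy (2*v + 20) of the model at x=2, y=1.
model = [[10, 50], [50, 10]]
image = [[0] * 5 for _ in range(5)]
for dy, row in enumerate(model):
    for dx, v in enumerate(row):
        image[1 + dy][2 + dx] = 2 * v + 20
score, offset = find_best_match(image, model)
```

Because the score is normalized, the brighter copy still scores 1.0 at offset (2, 1). Uniform brightness and contrast changes are tolerated, but rotation, size change, and nonuniform shading are not, which is the limitation described above.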

Geometric pattern-matching technology, in contrast, learns a part’s geometry using a set of boundary curves that are not tied to a pixel grid. The software then looks for similar shapes in the image without relying on specific gray levels, yielding a major improvement in the ability to accurately find parts despite changes in angle, size, and shading.
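
The sketch below illustrates the geometric idea under simplifying assumptions: the model is a set of boundary points in floating-point coordinates, edge points have already been extracted from the image, and each candidate pose (x, y, angle, scale) is scored by the fraction of transformed model points that land near an image edge point. The edge extraction, the 0.5-unit tolerance, and the brute-force pose grid are all assumptions for illustration; commercial geometric matchers are proprietary and far more sophisticated.

```python
import math

def transform(points, tx, ty, angle_deg, scale):
    """Apply a similarity transform (rotate, scale, translate) to model points."""
    c, s = math.cos(math.radians(angle_deg)), math.sin(math.radians(angle_deg))
    return [(scale * (c * x - s * y) + tx, scale * (s * x + c * y) + ty)
            for x, y in points]

def shape_score(model_pts, edge_pts, pose, tol=0.5):
    """Fraction of transformed model boundary points within `tol` of some
    image edge point. Gray levels never enter the score."""
    placed = transform(model_pts, *pose)
    hits = sum(
        1 for px, py in placed
        if any(math.hypot(px - ex, py - ey) <= tol for ex, ey in edge_pts)
    )
    return hits / len(placed)

def search(model_pts, edge_pts, xs, ys, angles, scales):
    """Brute-force search over a coarse pose grid; returns (score, pose)."""
    best = (-1.0, None)
    for tx in xs:
        for ty in ys:
            for a in angles:
                for sc in scales:
                    score = shape_score(model_pts, edge_pts, (tx, ty, a, sc))
                    if score > best[0]:
                        best = (score, (tx, ty, a, sc))
    return best

# Usage: a square outline (corners and side midpoints) as the trained shape,
# and image edges simulated as that shape moved, rotated 30 deg, and scaled 1.2x.
model = [(-2, -2), (0, -2), (2, -2), (2, 0), (2, 2), (0, 2), (-2, 2), (-2, 0)]
edges = transform(model, 5, 3, 30, 1.2)
best_score, best_pose = search(model, edges, range(10), range(10),
                               range(0, 90, 15), (0.8, 1.0, 1.2))
```

Angle and size are searched explicitly here, and because only boundary positions are compared, shading changes that leave the boundary intact do not change the score.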

Geometric pattern matching therefore becomes almost essential for accurate, consistent part location when many variables alter the way a part appears to a vision system. While several machine-vision companies offer geometric pattern matching, each vendor implements the concept in its own proprietary way. As a result, performance characteristics vary widely, which can make it difficult to determine which machine-vision software is best suited to a particular application.

Yield, its importance and quantification

Yield is defined as the fraction of images for which the vision system either finds the correct part if one is present, or reports that no part has been found if one is not present. Yield depends on the quality of the images presented to the vision system and on the capabilities of the pattern-recognition methods in use.
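
Applied to a set of evaluation images with known ground truth, this definition reduces to a simple count. In this sketch, a "correct" find is assumed to mean a reported position within a chosen tolerance of the true position; the `Trial` record and the 1-unit default tolerance are hypothetical.

```python
from dataclasses import dataclass
from typing import Optional, Tuple

Point = Tuple[float, float]

@dataclass
class Trial:
    part_present: bool              # ground truth for this image
    found: Optional[Point]          # reported position, or None for "no part"
    true_pos: Optional[Point] = None

def is_correct(trial, tol):
    if not trial.part_present:
        return trial.found is None  # correct only if nothing was reported
    if trial.found is None:
        return False                # missed a part that was present
    dx = trial.found[0] - trial.true_pos[0]
    dy = trial.found[1] - trial.true_pos[1]
    return (dx * dx + dy * dy) ** 0.5 <= tol  # found the right part, not a lookalike

def yield_fraction(trials, tol=1.0):
    return sum(is_correct(t, tol) for t in trials) / len(trials)

trials = [
    Trial(True, (10.2, 5.0), (10.0, 5.0)),   # correct find
    Trial(True, None, (3.0, 3.0)),           # miss
    Trial(False, None),                      # correct "no part"
    Trial(False, (1.0, 1.0)),                # false find on an empty image
    Trial(True, (40.0, 40.0), (10.0, 5.0)),  # reported the wrong location
]
y = yield_fraction(trials)  # 2 correct out of 5
```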

High yield is the single most important property of a pattern-recognition method for use in robotic guidance. Failures may result in lost productivity as production stops for human assistance, damage to or destruction of valuable parts, and even damage to production equipment. The cost of failures over the life of a vision system is generally far greater than the original purchase price.

No vendor can specify pattern-matching yield in advance. Yield can only be measured under a given set of conditions. A vision system operating at typical production speeds can be expected to see anywhere between one and ten billion images in its lifetime, representing conditions that are not easy to predict at the outset. Since yield cannot be predicted, and a billion images cannot be evaluated, some other strategy must be used to obtain reasonable confidence in the capabilities of the vision system.
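
Simple statistics show why a finite evaluation cannot pin down yield. If n test images all succeed, the largest per-image failure rate p still consistent with that result at 95% confidence satisfies (1 - p)^n = 0.05, which is approximately 3/n (the "rule of three"). The sketch below computes that bound and the failure count it would imply over a billion-image lifetime; the 3,000-image evaluation size is an arbitrary example.

```python
def max_failure_rate(n_images, confidence=0.95):
    """Largest per-image failure rate consistent, at the given confidence,
    with zero failures in n_images: solves (1 - p)**n = 1 - confidence."""
    return 1.0 - (1.0 - confidence) ** (1.0 / n_images)

def expected_failures(failure_rate, lifetime_images):
    return failure_rate * lifetime_images

bound = max_failure_rate(3000)                      # roughly 3 / 3000
lifetime = expected_failures(bound, 1_000_000_000)  # over a billion images
```

Even a flawless 3,000-image evaluation only bounds the failure rate at roughly 1 in 1,000, which could still mean on the order of a million failures over a billion images. That gap is why deliberately stressing the system beyond expected conditions is worthwhile.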

One useful strategy is to choose a variety of samples and test for a wider range of conditions than you expect to find in your application. Vary the part presentation angle and distance from camera. Vary the focus and illumination. Add shadows, occlusions, and confusing background. Another possible strategy is to record the images used for your evaluation, including the training images. This process provides a consistent foundation from which to compare competing systems, and to compare different parameter settings. A third approach is to ask your vendor for advice on using its product. An experienced vendor will have seen similar applications and will be able to offer advice on achieving the highest yield.
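
One way to make the first two strategies systematic is to enumerate a test matrix of conditions and record each image against the conditions that produced it, so the identical set can be replayed to competing systems or parameter settings. The condition names, levels, and file-naming scheme below are hypothetical placeholders.

```python
import itertools

# Hypothetical condition levels, deliberately wider than production expects.
conditions = {
    "angle_deg": [0, 45, 90, 180],
    "distance_mm": [400, 500, 600],
    "focus": ["sharp", "slightly_blurred"],
    "lighting": ["nominal", "dim", "bright", "side_shadow"],
    "occlusion": ["none", "partial"],
    "background": ["clean", "cluttered"],
}

def test_plan(conditions):
    """Yield one dict per combination of condition levels (full factorial)."""
    keys = list(conditions)
    for values in itertools.product(*(conditions[k] for k in keys)):
        yield dict(zip(keys, values))

plan = list(test_plan(conditions))
# Tie each recorded image to its conditions so the set can be replayed later.
manifest = [dict(cond, image_file=f"eval_{i:04d}.png") for i, cond in enumerate(plan)]
```

A full factorial grows quickly (384 combinations here), so in practice one might sample from the plan rather than capture every combination.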

Geometric pattern-matching accuracy

Pattern-matching accuracy can be defined as the statistical difference between the pose (position, angle, and size) of a part reported by the vision system and its true pose. Accuracy is generally reported as a “3-sigma” (3σ) value: for normally distributed errors, 99.7% of reported values can be expected to fall within three standard deviations, that is, within the stated accuracy of the true value. Pattern-matching accuracy is surprisingly difficult to specify and measure, and the accuracy obtained in practice depends on several factors.
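
For a single pose component, the bias and the 3σ spread can be computed from paired reported and true values, as in the sketch below. The 99.7% figure holds only for normally distributed errors, which the `NormalDist` line confirms numerically; the sample values are made up for illustration.

```python
import statistics
from statistics import NormalDist

def bias_and_three_sigma(reported, true):
    """Return (mean error, 3 * sample standard deviation of the error)
    for one pose component, e.g. x position in mm or angle in degrees."""
    errors = [r - t for r, t in zip(reported, true)]
    return statistics.mean(errors), 3 * statistics.stdev(errors)

# Fraction of a normal distribution lying within +/-3 sigma (about 0.9973).
coverage = NormalDist().cdf(3) - NormalDist().cdf(-3)

# Made-up example: five reported x positions for a part truly at 10.0 mm.
bias, spread = bias_and_three_sigma([10.01, 9.98, 10.02, 9.99, 10.00], [10.0] * 5)
```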

The first is geometry: a part’s size and shape determine how much information it can convey about position, angle, and size. Nearly circular parts, for example, provide little information about angle. The second is image quality, particularly how closely the part’s shape in the image matches the trained pattern, which determines how much the pose information is corrupted. The third is the ability of the pattern-matching method to extract pose information from images with varying degrees of corruption.

Clearly vendors have no control over the first two factors, and thus cannot specify in advance the accuracy that will be obtained. Therefore, all vendor-supplied accuracy claims represent somewhat ideal conditions of part shape and image quality. Such claims are useful and meaningful if the test conditions are not so artificial as to be unrealistic, and if the tests are conducted with sufficient care and sophistication. Ask vendors how accuracy numbers were obtained, and remember that for a given application, accuracy cannot be predicted but must be measured.

Testing accuracy during evaluation

The hardest part of testing accuracy lies in knowing what the result should be, particularly as the part is moved. One simple test involves capturing many images without moving the part or changing any other conditions, and measuring the standard deviation of the reported pose. This is probably the least useful of available tests, however, because only the effect of image noise is measured and most pattern-matching methods are fairly immune to uncorrelated noise.
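
The stationary-part test amounts to computing the sample standard deviation of each reported pose component. A minimal sketch, with made-up sample poses in an assumed (x, y, angle, scale) order:

```python
import statistics

def repeatability(poses):
    """Sample standard deviation of each component of the poses reported
    for a part that was never moved. Tuples are assumed to be ordered
    (x, y, angle, scale)."""
    return tuple(statistics.stdev(component) for component in zip(*poses))

poses = [
    (100.02, 50.01, 0.10, 1.000),
    (99.98, 49.99, 0.12, 1.001),
    (100.00, 50.00, 0.11, 0.999),
]
sx, sy, s_angle, s_scale = repeatability(poses)
```

The same function applies when illumination, aperture, or focus is varied between captures, since the true pose is again constant.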

Another possible test involves holding the part stationary but varying illumination (or lens aperture) and focus, while moving something over the part. The true pose does not change, and a good geometric pattern-matching system should report consistent poses even under these conditions.

Other testing alternatives include moving the part using an x-y stage and measuring the variation in reported angle and size; rotating the part and measuring the variation in reported size; and training two targets on a part, rotating the part, and measuring the variation in reported distance between the targets.
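
The two-target test relies on the fact that the physical distance between the targets does not change when the part rotates, so any spread in the reported distance is measurement error. In this sketch, the reported target positions are simulated from an exact rotation with a hypothetical 50-unit separation, so the spread is essentially zero; real measurements would replace `pairs`.

```python
import math
import statistics

def target_distance(p1, p2):
    return math.hypot(p2[0] - p1[0], p2[1] - p1[1])

def distance_variation(target_pairs):
    """target_pairs: ((x1, y1), (x2, y2)) reported at each rotation.
    Returns (mean, sample std dev, max - min) of the reported distance."""
    d = [target_distance(a, b) for a, b in target_pairs]
    return statistics.mean(d), statistics.stdev(d), max(d) - min(d)

# Simulated ideal reports: targets 25 units either side of (200, 150),
# with the part rotated in 30-degree steps.
pairs = []
for a in range(0, 180, 30):
    c, s = math.cos(math.radians(a)), math.sin(math.radians(a))
    pairs.append(((200 - 25 * c, 150 - 25 * s), (200 + 25 * c, 150 + 25 * s)))
mean_d, sd_d, range_d = distance_variation(pairs)
```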

Determining accuracy requires considerable measurement sophistication, for several reasons. Results are meaningless unless the part is moved relative to the pixel grid, since grid quantization has a far greater effect on accuracy than image noise. Also, geometric pattern-matching accuracy is (or should be) so high that it is extremely difficult to move parts by amounts that are known more accurately than the errors you are trying to measure. For the same reason, the effects of lens distortion and sensor pixel aspect ratio are significant and must be accounted for.
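
A one-dimensional simulation shows why the part must be moved relative to the pixel grid. An ideal step edge is area-sampled at ten subpixel positions and located with a deliberately crude whole-pixel estimator (an assumption for illustration; real geometric matchers interpolate to subpixel precision). With no noise, repeated images at any one position agree perfectly, yet the error swings through nearly a full pixel as the edge crosses the grid.

```python
def sample_edge(edge_pos, n_pixels=20):
    """Area-sample an ideal dark-to-bright step edge at a subpixel position:
    each pixel's value is the fraction of its width lying right of the edge."""
    return [min(max(i + 1 - edge_pos, 0.0), 1.0) for i in range(n_pixels)]

def whole_pixel_estimate(values):
    """Crude locator: index of the first pixel that is at least half bright."""
    return next(i for i, v in enumerate(values) if v >= 0.5)

# Move the edge across one pixel in 0.1-pixel steps and record the error.
positions = [10 + k / 10 for k in range(10)]
errors = [whole_pixel_estimate(sample_edge(p)) - p for p in positions]

# Repeating a single position gives identical results (zero spread),
# hiding a -0.4 pixel bias at this particular grid phase.
repeats = [whole_pixel_estimate(sample_edge(10.4)) for _ in range(5)]
```

Subpixel methods generally shrink this phase-dependent error but do not remove it entirely, and it only becomes visible when the part is moved across the grid.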

Vision-to-robot calibration

Once vision-sensor yield and accuracy are determined, calibration between the vision system’s pixel-based coordinate system and the robot’s coordinate system is vital for success. Whether the application involves conveyor tracking for pick-and-place, palletizing, or component assembly, vision-to-robot calibration is required to maintain system accuracy and repeatability.
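
For a flat work surface viewed without significant lens distortion or perspective, the pixel-to-robot mapping can be approximated by a 2D affine transform fitted by least squares to calibration points whose robot coordinates are known. The sketch below implements only that simplified model (scale, rotation, shear, and offset); the dot-grid pixel coordinates and the "true" transform used to generate robot coordinates are hypothetical.

```python
def solve3(A, b):
    """Solve a 3x3 linear system by Gaussian elimination with partial pivoting."""
    M = [row[:] + [bi] for row, bi in zip(A, b)]
    for col in range(3):
        pivot = max(range(col, 3), key=lambda r: abs(M[r][col]))
        M[col], M[pivot] = M[pivot], M[col]
        for r in range(col + 1, 3):
            f = M[r][col] / M[col][col]
            for c in range(col, 4):
                M[r][c] -= f * M[col][c]
    x = [0.0] * 3
    for r in (2, 1, 0):
        x[r] = (M[r][3] - sum(M[r][c] * x[c] for c in range(r + 1, 3))) / M[r][r]
    return x

def fit_affine(pixel_pts, robot_pts):
    """Least-squares fit of X = a*u + b*v + c and Y = d*u + e*v + f
    via the normal equations (A^T A) p = A^T y, with rows [u, v, 1]."""
    ata = [[0.0] * 3 for _ in range(3)]
    atx, aty = [0.0] * 3, [0.0] * 3
    for (u, v), (X, Y) in zip(pixel_pts, robot_pts):
        row = (u, v, 1.0)
        for i in range(3):
            for j in range(3):
                ata[i][j] += row[i] * row[j]
            atx[i] += row[i] * X
            aty[i] += row[i] * Y
    return solve3(ata, atx), solve3(ata, aty)

def pixel_to_robot(params, u, v):
    (a, b, c), (d, e, f) = params
    return a * u + b * v + c, d * u + e * v + f

# Hypothetical 3x3 grid of dots and the robot coordinates taught at each dot.
pixel_pts = [(u, v) for u in (100, 300, 500) for v in (100, 250, 400)]
robot_pts = [(0.1 * u - 0.02 * v + 250.0, 0.02 * u + 0.1 * v - 80.0)
             for u, v in pixel_pts]
params = fit_affine(pixel_pts, robot_pts)
X, Y = pixel_to_robot(params, 320, 240)
```

Using more points than the minimum three averages out measurement error in individual dots; the residuals of the fit are also a quick check on calibration quality.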

Calibration is one of the biggest challenges because it involves more than coming up with a scaling factor that relates pixels to a measured dimension. If there’s optical distortion from the lens, or perspective changes due to camera mounting angle, the vision software must include special algorithms to correct for these distortions in the image.
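
Lens distortion is commonly described by a radial model. The sketch below assumes the simplest one-coefficient version, in which a point's position in normalized coordinates is scaled by (1 + k1 * r^2), and inverts it by fixed-point iteration. The optical center, focal length in pixels, and k1 value are hypothetical; real calibration estimates them, usually with more coefficients, and perspective from a tilted camera needs a separate projective correction not shown here.

```python
def pixel_to_normalized(u, v, cx, cy, focal_px):
    """Shift to the (assumed) optical center and divide by focal length."""
    return (u - cx) / focal_px, (v - cy) / focal_px

def distort(xu, yu, k1):
    """Forward model: one-coefficient radial distortion, normalized coords."""
    f = 1 + k1 * (xu * xu + yu * yu)
    return xu * f, yu * f

def undistort(xd, yd, k1, iterations=20):
    """Invert the radial model by fixed-point iteration; converges quickly
    when k1 * r^2 is small, as it is for moderate distortion."""
    xu, yu = xd, yd
    for _ in range(iterations):
        f = 1 + k1 * (xu * xu + yu * yu)
        xu, yu = xd / f, yd / f
    return xu, yu

# Hypothetical camera: center (640, 480), 1200 px focal length, barrel k1 < 0.
xn, yn = pixel_to_normalized(820, 390, cx=640, cy=480, focal_px=1200)
xd, yd = distort(xn, yn, k1=-0.15)     # what the lens would actually image
xu, yu = undistort(xd, yd, k1=-0.15)   # corrected back toward (xn, yn)
```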

In the past, calibration was somewhat cumbersome, but standard practices have evolved to simplify the task. The most advanced vision software now includes step-by-step wizards that guide users through correlating image pixels to robot coordinates using a variety of targets, including a grid of dots, a checkerboard, or a custom calibration plate.

The latest software also supports multi-pose two-dimensional calibration to optimize system accuracy and enable the use of a smaller, more manageable calibration plate in large field-of-view applications.

BRYAN BOATNER is product marketing manager for In-Sight vision sensors at Cognex, 1 Vision Dr., Natick, MA 01760; www.cognex.com.